Enterprise Install

Refer to the following sections to troubleshoot errors encountered when installing an Enterprise Cluster.

Scenario - Self-linking Error

When installing an Enterprise Cluster, you may encounter an error stating that the enterprise cluster is unable to self-link. Self-linking is the process of Palette or VerteX becoming aware of the Kubernetes cluster it is installed on. This error may occur if the self-hosted pack registry specified in the installation is missing the Certificate Authority (CA) certificate. You can resolve this issue by adding the CA certificate to the pack registry.

Debug Steps

-

Log in to the pack registry server that you specified in the Palette or VerteX installation.

-

Download the CA certificate from the pack registry server. Different OCI registries have different methods for downloading the CA certificate. For Harbor, check out the Download the Harbor Certificate guide.

-

Log in to the system console. Refer to Access Palette system console or Access Vertex system console for additional guidance.

-

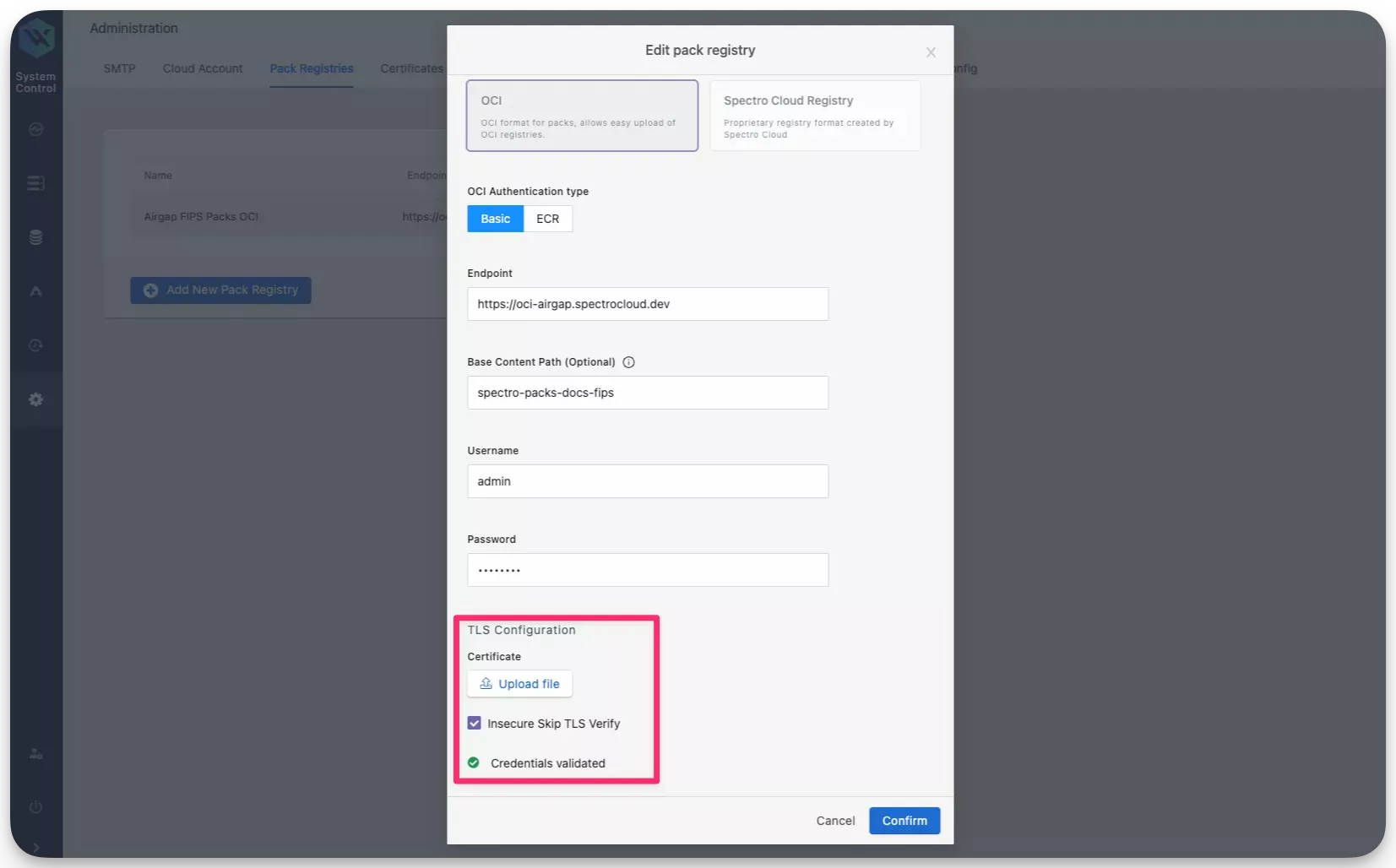

From the left navigation menu, select Administration and click on the Pack Registries tab.

-

Click on the three-dot Menu icon for the pack registry that you specified in the installation and select Edit.

-

Click on the Upload file button and upload the CA certificate that you downloaded in step 2.

-

Check the box Insecure Skip TLS Verify and click on Confirm.

After a few moments, a system profile will be created and Palette or VerteX will be able to self-link successfully. If you continue to encounter issues, contact our support team by emailing support@spectrocloud.com so that we can provide you with further guidance.
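
If your registry has no documented download path for step 2, you can at least inspect the certificates it presents during the TLS handshake. The following is a minimal sketch, not an official procedure: the function names and the `registry.example.com` host are hypothetical, and it assumes `openssl` and `awk` are installed. A server often omits the root CA from the chain it sends, so the last certificate may be an intermediate. Confirm what you extracted before uploading it.

```shell
# Print the certificate chain that a registry presents during the TLS handshake.
# Usage: show_registry_chain <registry-host> [port]
show_registry_chain() (
  host="$1"
  port="${2:-443}"
  openssl s_client -connect "$host:$port" -servername "$host" -showcerts \
    </dev/null 2>/dev/null
)

# Keep only the last PEM certificate block from the text read on stdin.
# In a server-sent chain this is the highest issuer the server chose to send.
last_pem_certificate() {
  awk '
    /-----BEGIN CERTIFICATE-----/ { block = ""; inside = 1 }
    inside                        { block = block $0 "\n" }
    /-----END CERTIFICATE-----/   { inside = 0; last = block }
    END                           { printf "%s", last }
  '
}
```

For example, `show_registry_chain registry.example.com | last_pem_certificate > registry-ca.crt` writes the candidate certificate to a file, and `openssl x509 -in registry-ca.crt -noout -subject -issuer` shows whose certificate it is.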

Scenario - Enterprise Backup Stuck

If an enterprise backup is stuck, restarting the management pod may resolve the issue. Use the following steps to restart the management pod.

Debug Steps

- Open a terminal session in an environment that has network access to the Kubernetes cluster. Refer to the Access Cluster with CLI guide for additional guidance.

- Identify the mgmt pod in the hubble-system namespace. Use the following command to list all pods in the hubble-system namespace and filter for the mgmt pod.

      kubectl get pods --namespace hubble-system | grep mgmt

      mgmt-f7f97f4fd-lds69   1/1     Running   0          45m

- Restart the mgmt pod by deleting it. Use the following command to delete the mgmt pod. Replace <mgmt-pod-name> with the actual name of the mgmt pod that you identified in step 2.

      kubectl delete pod <mgmt-pod-name> --namespace hubble-system

      pod "mgmt-f7f97f4fd-lds69" deleted
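
The lookup and the delete can be combined so that the pod name does not need to be copied by hand. The following is a minimal sketch: the function names are hypothetical, and it assumes that `kubectl` already points at the enterprise cluster and that exactly one pod name starts with `mgmt-`. It also assumes a controller recreates the pod after deletion, which the generated pod name suggests but this guide does not state.

```shell
# Print the name of the first pod whose name starts with "mgmt-",
# reading `kubectl get pods` output from stdin.
mgmt_pod_name() {
  awk '$1 ~ /^mgmt-/ { print $1; exit }'
}

# Look up the mgmt pod and delete it so that it gets recreated.
# Usage: restart_mgmt_pod [namespace]   (defaults to hubble-system)
restart_mgmt_pod() (
  namespace="${1:-hubble-system}"
  pod="$(kubectl get pods --namespace "$namespace" --no-headers | mgmt_pod_name)"
  [ -n "$pod" ] || { echo "no mgmt pod found in namespace $namespace" >&2; exit 1; }
  kubectl delete pod "$pod" --namespace "$namespace"
)
```

After calling `restart_mgmt_pod`, rerun the `kubectl get pods` command from step 2 to confirm that a new mgmt pod reaches the Running state.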

Non-unique vSphere CNS Mapping

In Palette and VerteX releases 4.4.8 and earlier, Persistent Volume Claim (PVC) metadata does not use a unique identifier for self-hosted Palette clusters. This causes incorrect Cloud Native Storage (CNS) mappings in vSphere, potentially leading to issues during node operations and upgrades.

This issue is resolved in Palette and VerteX releases starting with 4.4.14. However, upgrading to 4.4.14 will not automatically resolve this issue. If you have self-hosted instances of Palette in your vSphere environment older than 4.4.14, you should execute the following utility script manually to make the CNS mapping unique for the associated PVC.

Debug Steps

- Ensure your machine has network access to your self-hosted Palette instance with kubectl. Alternatively, establish an SSH connection to a machine where you can access your self-hosted Palette instance with kubectl.

- Log in to your self-hosted Palette instance System Console.

- In the Main Menu, click Enterprise Cluster.

- In the cluster details page, scroll down to the Kubernetes Config File field and download the kubeconfig file.

- Issue the following command to download the utility script.

      curl --output csi-helper https://software.spectrocloud.com/tools/csi-helper/csi-helper

- Adjust the permission of the script.

      chmod +x csi-helper

- Issue the following command to execute the utility script. Replace the placeholder with the path to your kubeconfig file.

      ./csi-helper --kubeconfig=<PATH_TO_KUBECONFIG>

- Issue the following command to verify that the script has updated the cluster ID.

      kubectl describe configmap vsphere-cloud-config --namespace=kube-system

  If the update is successful, the cluster ID in the ConfigMap will have a unique ID assigned instead of spectro-mgmt/spectro-mgmt-cluster.

      Name:         vsphere-cloud-config
      Namespace:    kube-system
      Labels:       component=cloud-controller-manager
                    vsphere-cpi-infra=config
      Annotations:  cluster.spectrocloud.com/last-applied-hash: 17721994478134573986

      Data
      ====
      vsphere.conf:
      ----
      [Global]
      cluster-id = "896d25b9-bfac-414f-bb6f-52fd469d3a6c/spectro-mgmt-cluster"

      [VirtualCenter "vcenter.spectrocloud.dev"]
      insecure-flag = "true"
      user = "example@vsphere.local"
      password = "************"

      [Labels]
      zone = "k8s-zone"
      region = "k8s-region"

      BinaryData
      ====

      Events:  <none>
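
The download, execute, and verify steps above can be chained so that the check on the cluster ID is automatic rather than visual. The following is a minimal sketch, not part of the utility itself: the function names are hypothetical, and the `--fail` and `--location` options on `curl` and the `jsonpath` query are additions that this guide does not use. It treats any cluster ID that still starts with `spectro-mgmt/` as not yet updated, based on the default value named above.

```shell
# Extract the cluster-id value from vsphere.conf text read on stdin.
cluster_id_from_conf() {
  sed -n 's/^[[:space:]]*cluster-id[[:space:]]*=[[:space:]]*"\(.*\)"[[:space:]]*$/\1/p' | head -n 1
}

# Succeed only when the ID no longer starts with the shared "spectro-mgmt/" prefix.
cluster_id_is_unique() {
  case "$1" in
    "" | spectro-mgmt/*) return 1 ;;
    *) return 0 ;;
  esac
}

# Download and run csi-helper, then confirm that the ConfigMap was updated.
# Usage: fix_cns_mapping <PATH_TO_KUBECONFIG>
fix_cns_mapping() (
  kubeconfig="$1"
  [ -f "$kubeconfig" ] || { echo "kubeconfig not found: $kubeconfig" >&2; exit 1; }

  # --fail stops on HTTP errors instead of saving an error page as the script.
  curl --fail --location --output csi-helper \
    https://software.spectrocloud.com/tools/csi-helper/csi-helper || exit 1
  chmod +x csi-helper
  ./csi-helper --kubeconfig="$kubeconfig" || exit 1

  # Read only the vsphere.conf key of the ConfigMap and pull out the cluster ID.
  id="$(kubectl --kubeconfig "$kubeconfig" get configmap vsphere-cloud-config \
    --namespace kube-system --output 'jsonpath={.data.vsphere\.conf}' | cluster_id_from_conf)"
  cluster_id_is_unique "$id" || { echo "cluster-id is still not unique: $id" >&2; exit 1; }
  echo "cluster-id is now $id"
)
```

Calling `fix_cns_mapping ./palette.kubeconfig` (a hypothetical file name) returns a non-zero status if any step fails or if the cluster ID still carries the default prefix.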